Find Area Where Solution Exists and Is Unique

Differential equation containing derivatives with respect to only one variable

In mathematics, an ordinary differential equation (ODE) is a differential equation containing one or more functions of one independent variable and the derivatives of those functions.[1] The term ordinary is used in contrast with partial differential equations, which may involve derivatives with respect to more than one independent variable.[2]

Differential equations

A linear differential equation is a differential equation that is defined by a linear polynomial in the unknown function and its derivatives, that is, an equation of the form

$$a_0(x)\,y + a_1(x)\,y' + a_2(x)\,y'' + \cdots + a_n(x)\,y^{(n)} + b(x) = 0,$$

where $a_0(x), \ldots, a_n(x)$ and $b(x)$ are arbitrary differentiable functions that do not need to be linear, and $y', \ldots, y^{(n)}$ are the successive derivatives of the unknown function $y$ of the variable $x$.

Among ordinary differential equations, linear differential equations play a prominent role for several reasons. Most elementary and special functions that are encountered in physics and applied mathematics are solutions of linear differential equations (see Holonomic function). When physical phenomena are modeled with non-linear equations, they are generally approximated by linear differential equations for an easier solution. The few non-linear ODEs that can be solved explicitly are generally solved by transforming the equation into an equivalent linear ODE (see, for example Riccati equation).

Some ODEs can be solved explicitly in terms of known functions and integrals. When that is not possible, the equation for computing the Taylor series of the solutions may be useful. For applied problems, numerical methods for ordinary differential equations can supply an approximation of the solution.

Background

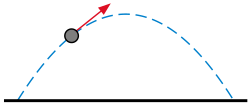

The trajectory of a projectile launched from a cannon follows a curve determined by an ordinary differential equation that is derived from Newton's second law.

Ordinary differential equations (ODEs) arise in many contexts of mathematics and social and natural sciences. Mathematical descriptions of change use differentials and derivatives. Various differentials, derivatives, and functions become related via equations, such that a differential equation is a result that describes dynamically changing phenomena, evolution, and variation. Often, quantities are defined as the rate of change of other quantities (for example, derivatives of displacement with respect to time), or gradients of quantities, which is how they enter differential equations.

Specific mathematical fields include geometry and analytical mechanics. Scientific fields include much of physics and astronomy (celestial mechanics), meteorology (weather modeling), chemistry (reaction rates),[3] biology (infectious diseases, genetic variation), ecology and population modeling (population competition), economics (stock trends, interest rates and the market equilibrium price changes).

Many mathematicians have studied differential equations and contributed to the field, including Newton, Leibniz, the Bernoulli family, Riccati, Clairaut, d'Alembert, and Euler.

A simple example is Newton's second law of motion: the relationship between the displacement $x$ and the time $t$ of an object under the force $F$ is given by the differential equation

$$m\,\frac{d^2 x(t)}{dt^2} = F(x(t)),$$

which constrains the motion of a particle of constant mass $m$. In general, $F$ is a function of the position $x(t)$ of the particle at time $t$. The unknown function $x(t)$ appears on both sides of the differential equation, and is indicated in the notation $F(x(t))$.[4] [5] [6] [7]

Definitions

In what follows, let $y$ be a dependent variable and $x$ an independent variable, so that $y = f(x)$ is an unknown function of $x$. The notation for differentiation varies depending upon the author and upon which notation is most useful for the task at hand. In this context, Leibniz's notation ($\frac{dy}{dx}, \frac{d^2y}{dx^2}, \ldots, \frac{d^ny}{dx^n}$) is more useful for differentiation and integration, whereas Lagrange's notation ($y', y'', \ldots, y^{(n)}$) is more useful for representing derivatives of any order compactly, and Newton's notation ($\dot{y}, \ddot{y}, \dddot{y}$) is often used in physics for representing derivatives of low order with respect to time.

General definition

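Let $F$ be a given function of $x$, $y$, and derivatives of $y$. Then an equation of the form

$$F\left(x, y, y', \ldots, y^{(n-1)}\right) = y^{(n)}$$

is called an explicit ordinary differential equation of order $n$.[8] [9]

More generally, an implicit ordinary differential equation of order $n$ takes the form[10]

$$F\left(x, y, y', y'', \ldots, y^{(n)}\right) = 0.$$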

There are further classifications:

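- Autonomous: A differential equation not depending on $x$ is called autonomous.

- Linear: A differential equation is said to be linear if $F$ can be written as a linear combination of the derivatives of $y$, that is, if it can be rewritten as $y^{(n)} = \sum_{i=0}^{n-1} a_i(x)\,y^{(i)} + r(x)$, where $a_i(x)$ and $r(x)$ are continuous functions of $x$. The function $r(x)$ is called the source term and leads to the further classification below.

- Homogeneous: A linear differential equation is homogeneous if $r(x) = 0$. In this case, one "automatic" solution is the trivial solution, $y = 0$. The solution of a linear homogeneous equation is a complementary function, denoted here by $y_c$.

- Nonhomogeneous (or inhomogeneous): A linear differential equation is nonhomogeneous if $r(x) \neq 0$. The additional solution to the complementary function is the particular integral, denoted here by $y_p$.

- Non-linear: A differential equation that cannot be written in the form of a linear combination.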

System of ODEs

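A number of coupled differential equations form a system of equations. If $\mathbf{y}$ is a vector whose elements are functions, $\mathbf{y}(x) = [y_1(x), y_2(x), \ldots, y_m(x)]$, and $\mathbf{F}$ is a vector-valued function of $\mathbf{y}$ and its derivatives, then

$$\mathbf{y}^{(n)} = \mathbf{F}\left(x, \mathbf{y}, \mathbf{y}', \mathbf{y}'', \ldots, \mathbf{y}^{(n-1)}\right)$$

is an explicit system of ordinary differential equations of order $n$ and dimension $m$. In column vector form:

$$\begin{pmatrix} y_1^{(n)} \\ y_2^{(n)} \\ \vdots \\ y_m^{(n)} \end{pmatrix} = \begin{pmatrix} f_1\left(x, \mathbf{y}, \mathbf{y}', \ldots, \mathbf{y}^{(n-1)}\right) \\ f_2\left(x, \mathbf{y}, \mathbf{y}', \ldots, \mathbf{y}^{(n-1)}\right) \\ \vdots \\ f_m\left(x, \mathbf{y}, \mathbf{y}', \ldots, \mathbf{y}^{(n-1)}\right) \end{pmatrix}$$

These are not necessarily linear. The implicit analogue is:

$$\mathbf{F}\left(x, \mathbf{y}, \mathbf{y}', \mathbf{y}'', \ldots, \mathbf{y}^{(n)}\right) = \mathbf{0},$$

where $\mathbf{0} = (0, 0, \ldots, 0)$ is the zero vector. In matrix form:

$$\begin{pmatrix} f_1\left(x, \mathbf{y}, \mathbf{y}', \ldots, \mathbf{y}^{(n)}\right) \\ f_2\left(x, \mathbf{y}, \mathbf{y}', \ldots, \mathbf{y}^{(n)}\right) \\ \vdots \\ f_m\left(x, \mathbf{y}, \mathbf{y}', \ldots, \mathbf{y}^{(n)}\right) \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 0 \end{pmatrix}$$

For a system of the form $\mathbf{F}(x, \mathbf{y}, \mathbf{y}') = \mathbf{0}$, some sources also require that the Jacobian matrix $\frac{\partial \mathbf{F}(x, \mathbf{u}, \mathbf{v})}{\partial \mathbf{v}}$ be non-singular in order to call this an implicit ODE system; an implicit ODE system satisfying this Jacobian non-singularity condition can be transformed into an explicit ODE system. In the same sources, implicit ODE systems with a singular Jacobian are termed differential algebraic equations (DAEs). This distinction is not merely one of terminology; DAEs have fundamentally different characteristics and are generally more involved to solve than (nonsingular) ODE systems.[14] [15] [16] Presumably for additional derivatives, the Hessian matrix and so forth are also assumed non-singular according to this scheme, although note that any ODE of order greater than one can be (and usually is) rewritten as a system of ODEs of first order,[17] which makes the Jacobian singularity criterion sufficient for this taxonomy to be comprehensive at all orders.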

The behavior of a system of ODEs can be visualized through the use of a phase portrait.

Solutions

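Given a differential equation

$$F\left(x, y, y', \ldots, y^{(n)}\right) = 0,$$

a function $u: I \subset \mathbb{R} \to \mathbb{R}$, where $I$ is an interval, is called a solution or integral curve for $F$ if $u$ is $n$-times differentiable on $I$ and

$$F\left(x, u, u', \ldots, u^{(n)}\right) = 0, \qquad x \in I.$$

Given two solutions $u: J \subset \mathbb{R} \to \mathbb{R}$ and $v: I \subset \mathbb{R} \to \mathbb{R}$, $u$ is called an extension of $v$ if $I \subset J$ and

$$u(x) = v(x), \qquad x \in I.$$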

A solution that has no extension is called a maximal solution. A solution defined on all of R is called a global solution.

A general solution of an nth-order equation is a solution containing n arbitrary independent constants of integration. A particular solution is derived from the general solution by setting the constants to particular values, often chosen to fulfill given initial conditions or boundary conditions.[18] A singular solution is a solution that cannot be obtained by assigning definite values to the arbitrary constants in the general solution.[19]

In the context of linear ODE, the terminology particular solution can also refer to any solution of the ODE (not necessarily satisfying the initial conditions), which is then added to the homogeneous solution (a general solution of the homogeneous ODE), which then forms a general solution of the original ODE. This is the terminology used in the guessing method section in this article, and is frequently used when discussing the method of undetermined coefficients and variation of parameters.
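The three kinds of solution can be seen side by side in Clairaut's equation. This is a standard textbook example chosen here for illustration; it does not appear in the text above.

$$
\begin{aligned}
&\text{Equation:} && y = x\,y' + (y')^2 \\
&\text{General solution (one constant, first order):} && y = Cx + C^2 \\
&\text{Check:} && y' = C \;\Rightarrow\; x\,y' + (y')^2 = Cx + C^2 = y \\
&\text{Particular solution } (C = 1): && y = x + 1 \\
&\text{Singular solution:} && y = -\tfrac{x^2}{4} \\
&\text{Check:} && y' = -\tfrac{x}{2} \;\Rightarrow\; x\,y' + (y')^2 = -\tfrac{x^2}{2} + \tfrac{x^2}{4} = -\tfrac{x^2}{4} = y
\end{aligned}
$$

The parabola $y = -x^2/4$ is not a straight line, so no choice of $C$ in $y = Cx + C^2$ reproduces it; it is the envelope of the family of lines.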

Theories

Singular solutions

The theory of singular solutions of ordinary and partial differential equations was a subject of research from the time of Leibniz, but only since the middle of the nineteenth century has it received special attention. A valuable but little-known work on the subject is that of Houtain (1854). Darboux (from 1873) was a leader in the theory, and in the geometric interpretation of these solutions he opened a field worked by various writers, notably Casorati and Cayley. To the latter is due (1872) the theory of singular solutions of differential equations of the first order as accepted circa 1900.

Reduction to quadratures

The primitive attempt in dealing with differential equations had in view a reduction to quadratures. As it had been the hope of eighteenth-century algebraists to find a method for solving the general equation of the nth degree, so it was the hope of analysts to find a general method for integrating any differential equation. Gauss (1799) showed, however, that complex differential equations require complex numbers. Hence, analysts began to substitute the study of functions, thus opening a new and fertile field. Cauchy was the first to appreciate the importance of this view. Thereafter, the real question was no longer whether a solution is possible by means of known functions or their integrals, but whether a given differential equation suffices for the definition of a function of the independent variable or variables, and, if so, what are the characteristic properties.

Fuchsian theory

Two memoirs by Fuchs[20] inspired a novel approach, subsequently elaborated by Thomé and Frobenius. Collet was a prominent contributor beginning in 1869. His method for integrating a non-linear system was communicated to Bertrand in 1868. Clebsch (1873) attacked the theory along lines parallel to those in his theory of Abelian integrals. As the latter can be classified according to the properties of the fundamental curve that remains unchanged under a rational transformation, Clebsch proposed to classify the transcendent functions defined by differential equations according to the invariant properties of the corresponding surfaces f = 0 under rational one-to-one transformations.

Lie's theory

From 1870, Sophus Lie's work put the theory of differential equations on a better foundation. He showed that the integration theories of the older mathematicians can, using Lie groups, be referred to a common source, and that ordinary differential equations that admit the same infinitesimal transformations present comparable integration difficulties. He also emphasized the subject of transformations of contact.

Lie's group theory of differential equations has been recognized on two counts: (1) it unifies the many ad hoc methods known for solving differential equations, and (2) it provides powerful new ways to find solutions. The theory has applications to both ordinary and partial differential equations.[21]

A general solution approach uses the symmetry property of differential equations, the continuous infinitesimal transformations of solutions to solutions (Lie theory). Continuous group theory, Lie algebras, and differential geometry are used to understand the structure of linear and nonlinear (partial) differential equations, to generate integrable equations, to find their Lax pairs, recursion operators, and Bäcklund transforms, and finally to find exact analytic solutions to differential equations.

Symmetry methods have been applied to differential equations that arise in mathematics, physics, engineering, and other disciplines.

Sturm–Liouville theory

Sturm–Liouville theory is a theory of a special type of second order linear ordinary differential equation. Their solutions are based on eigenvalues and corresponding eigenfunctions of linear operators defined via second-order homogeneous linear equations. The problems are identified as Sturm-Liouville Problems (SLP) and are named after J.C.F. Sturm and J. Liouville, who studied them in the mid-1800s. SLPs have an infinite number of eigenvalues, and the corresponding eigenfunctions form a complete, orthogonal set, which makes orthogonal expansions possible. This is a key idea in applied mathematics, physics, and engineering.[22] SLPs are also useful in the analysis of certain partial differential equations.

Existence and uniqueness of solutions

There are several theorems that establish existence and uniqueness of solutions to initial value problems involving ODEs both locally and globally. The two main theorems are

| Theorem | Assumption | Conclusion |
| --- | --- | --- |
| Peano existence theorem | F continuous | local existence only |
| Picard–Lindelöf theorem | F Lipschitz continuous | local existence and uniqueness |

In their basic form both of these theorems only guarantee local results, though the latter can be extended to give a global result, for example, if the conditions of Grönwall's inequality are met.
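The gap between the two theorems can be illustrated with a standard example, chosen here for illustration and not taken from the text above, in which $F$ is continuous but not Lipschitz continuous near the initial value. For negative $y$, $y^{2/3}$ is read as $(y^2)^{1/3}$.

$$
\begin{aligned}
&\text{Initial value problem:} && y' = 3\,y^{2/3}, \qquad y(0) = 0 \\
&\text{First solution:} && y(x) = 0 \\
&\text{Second solution:} && y(x) = x^3, \qquad y' = 3x^2 = 3\,(x^3)^{2/3} \\
&\text{Why uniqueness fails:} && \frac{\partial F}{\partial y} = 2\,y^{-1/3} \text{ is unbounded as } y \to 0
\end{aligned}
$$

Peano's theorem applies, so a solution exists, but the Lipschitz condition fails at $y = 0$ and two distinct solutions pass through the same initial point.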

Also, uniqueness theorems like the Lipschitz one above do not apply to DAE systems, which may have multiple solutions stemming from their (non-linear) algebraic part alone.[23]

Local existence and uniqueness theorem simplified

The theorem can be stated simply as follows.[24] For the equation and initial value problem

$$y' = F(x, y), \qquad y_0 = y(x_0),$$

if $F$ and $\partial F/\partial y$ are continuous in a closed rectangle

$$R = [x_0 - a, x_0 + a] \times [y_0 - b, y_0 + b]$$

in the x-y plane, where $a$ and $b$ are real (symbolically: $a, b \in \mathbb{R}$), $\times$ denotes the Cartesian product, and square brackets denote closed intervals, then there is an interval

$$I = [x_0 - h, x_0 + h] \subset [x_0 - a, x_0 + a]$$

for some $h \in \mathbb{R}$ where the solution to the above equation and initial value problem can be found. That is, there is a solution and it is unique. Since there is no restriction on $F$ to be linear, this applies to non-linear equations of the form $y' = F(x, y)$, and it can also be applied to systems of equations.
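A minimal sketch of Picard iteration, the successive-approximation scheme classically used to prove the Picard–Lindelöf theorem, may make the local result more concrete. The iteration itself is not described in the text above. The choice $F(x, y) = y$ with $y(0) = 1$ and the helper names are assumptions made for illustration; with this $F$ every iterate is a polynomial, so exact rational arithmetic suffices.

```python
from fractions import Fraction


def integrate_poly(coeffs):
    """Antiderivative (zero constant term) of a polynomial given by its
    coefficient list [c0, c1, ...] meaning c0 + c1*t + c2*t**2 + ..."""
    return [Fraction(0)] + [c / (k + 1) for k, c in enumerate(coeffs)]


def picard_iterates(y0, n):
    """Picard iterates for y' = y, y(0) = y0 (illustrative choice of F).

    phi_0(x) = y0 and phi_{k+1}(x) = y0 + integral_0^x phi_k(t) dt.
    Each iterate is returned as a list of polynomial coefficients.
    """
    phi = [Fraction(y0)]
    iterates = [phi]
    for _ in range(n):
        nxt = integrate_poly(phi)   # integral of F(t, phi(t)) = phi(t)
        nxt[0] += Fraction(y0)      # add the initial value
        phi = nxt
        iterates.append(phi)
    return iterates


iterates = picard_iterates(1, 4)
# The coefficients of the k-th iterate are the first k+1 Taylor
# coefficients of exp(x), the unique solution of this problem.
print([str(c) for c in iterates[-1]])
```

For this particular problem the iterates converge on the whole real line; in general the theorem only guarantees convergence on some interval around $x_0$.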

Global uniqueness and maximum domain of solution

When the hypotheses of the Picard–Lindelöf theorem are satisfied, then local existence and uniqueness can be extended to a global result. More precisely:[25]

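For each initial condition $(x_0, y_0)$ there exists a unique maximum (possibly infinite) open interval

$$I_{\max} = (x_-, x_+), \qquad x_\pm \in \mathbb{R} \cup \{\pm\infty\}, \qquad x_0 \in I_{\max},$$

such that any solution that satisfies this initial condition is a restriction of the solution that satisfies this initial condition with domain $I_{\max}$.

In the case that $x_\pm \neq \pm\infty$, there are exactly two possibilities:

- explosion in finite time: $\limsup_{x \to x_\pm} \|y(x)\| \to \infty$

- leaves domain of definition: $\lim_{x \to x_\pm} y(x) \in \partial\bar{\Omega}$

where $\Omega$ is the open set in which $F$ is defined, and $\partial\bar{\Omega}$ is its boundary.

Note that the maximum domain of the solution

- is always an interval (to have uniqueness)

- may be smaller than $\mathbb{R}$

- may depend on the specific choice of $(x_0, y_0)$.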

Example.

$$y' = y^2$$

This means that $F(x, y) = y^2$, which is $C^1$ and therefore locally Lipschitz continuous, satisfying the Picard–Lindelöf theorem.

Even in such a simple setting, the maximum domain of solution cannot be all of $\mathbb{R}$, since the solution is

$$y(x) = \frac{y_0}{(x_0 - x)\,y_0 + 1},$$

which has maximum domain:

$$\begin{cases} \mathbb{R} & y_0 = 0 \\ \left(-\infty,\; x_0 + \frac{1}{y_0}\right) & y_0 > 0 \\ \left(x_0 + \frac{1}{y_0},\; +\infty\right) & y_0 < 0 \end{cases}$$

This shows clearly that the maximum interval may depend on the initial conditions. The domain of $y$ could be taken as being $\mathbb{R} \setminus \{x_0 + 1/y_0\}$, but this would lead to a domain that is not an interval, so that the side opposite to the initial condition would be disconnected from the initial condition, and therefore not uniquely determined by it.

The maximum domain is not $\mathbb{R}$ because

$$\lim_{x \to x_\pm} \|y(x)\| \to \infty,$$

which is one of the two possible cases according to the above theorem.
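The blow-up in this example can be checked numerically. The sketch below evaluates the closed-form solution $y(x) = y_0 / ((x_0 - x)\,y_0 + 1)$ of $y' = y^2$, reports the maximal interval, and estimates the residual $y' - y^2$ by a central difference. The function names and the sample values $x_0 = 0$, $y_0 = 1$ are assumptions for illustration.

```python
import math


def exact_solution(x, x0, y0):
    """Closed-form solution of y' = y**2 with y(x0) = y0."""
    return y0 / ((x0 - x) * y0 + 1.0)


def maximal_interval(x0, y0):
    """Maximal open interval on which the solution above is defined."""
    if y0 == 0:
        return (-math.inf, math.inf)
    edge = x0 + 1.0 / y0  # point where the denominator vanishes
    return (-math.inf, edge) if y0 > 0 else (edge, math.inf)


def residual(x, x0, y0, h=1e-5):
    """Central-difference estimate of y'(x) - y(x)**2 (should be near 0)."""
    dy = (exact_solution(x + h, x0, y0) - exact_solution(x - h, x0, y0)) / (2 * h)
    return dy - exact_solution(x, x0, y0) ** 2


x0, y0 = 0.0, 1.0
print("maximal interval:", maximal_interval(x0, y0))
print("residual at x = 0.5:", residual(0.5, x0, y0))
# Values grow without bound as x approaches the right endpoint x0 + 1/y0.
for x in (0.9, 0.99, 0.999):
    print(x, exact_solution(x, x0, y0))
```

Changing the sign of `y0` moves the finite endpoint to the other side of `x0`, which matches the case distinction in the maximum domain above.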

Reduction of order

Differential equations can usually be solved more easily if the order of the equation can be reduced.

Reduction to a first-order system

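Any explicit differential equation of order $n$,

$$F\left(x, y, y', y'', \ldots, y^{(n-1)}\right) = y^{(n)},$$

can be written as a system of $n$ first-order differential equations by defining a new family of unknown functions

$$y_i = y^{(i-1)}$$

for $i = 1, 2, \ldots, n$. The $n$-dimensional system of first-order coupled differential equations is then

$$\begin{aligned} y_1' &= y_2 \\ y_2' &= y_3 \\ &\;\;\vdots \\ y_{n-1}' &= y_n \\ y_n' &= F(x, y_1, \ldots, y_n), \end{aligned}$$

more compactly in vector notation:

$$\mathbf{y}' = \mathbf{F}(x, \mathbf{y}),$$

where

$$\mathbf{y} = (y_1, \ldots, y_n), \qquad \mathbf{F}(x, y_1, \ldots, y_n) = \left(y_2, \ldots, y_n, F(x, y_1, \ldots, y_n)\right).$$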
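The reduction is also how higher-order equations are usually handed to numerical integrators. The following sketch builds the first-order system from a given $F$ and advances it with the classical fourth-order Runge–Kutta method. The integrator choice, the helper names, and the test equation $y'' = -y$ with $y(0) = 1$, $y'(0) = 0$ are assumptions for illustration, not part of the text above.

```python
import math


def to_first_order(F):
    """Turn y^(n) = F(x, y, y', ..., y^(n-1)) into Y' = G(x, Y),
    where Y = [y_1, ..., y_n] and y_i = y^(i-1)."""
    def G(x, Y):
        # y_1' = y_2, ..., y_{n-1}' = y_n, and y_n' = F(x, y_1, ..., y_n)
        return Y[1:] + [F(x, *Y)]
    return G


def rk4(G, x, Y, h, steps):
    """Advance Y' = G(x, Y) by `steps` classical Runge-Kutta steps of size h."""
    for _ in range(steps):
        k1 = G(x, Y)
        k2 = G(x + h / 2, [y + h / 2 * k for y, k in zip(Y, k1)])
        k3 = G(x + h / 2, [y + h / 2 * k for y, k in zip(Y, k2)])
        k4 = G(x + h, [y + h * k for y, k in zip(Y, k3)])
        Y = [y + h / 6 * (a + 2 * b + 2 * c + d)
             for y, a, b, c, d in zip(Y, k1, k2, k3, k4)]
        x += h
    return x, Y


# Illustrative second-order equation y'' = -y, whose exact solution
# for y(0) = 1, y'(0) = 0 is y = cos(x).
G = to_first_order(lambda x, y, dy: -y)
x_end, Y_end = rk4(G, 0.0, [1.0, 0.0], h=0.01, steps=100)
print(Y_end[0], math.cos(1.0))    # y(1)  vs cos(1)
print(Y_end[1], -math.sin(1.0))   # y'(1) vs -sin(1)
```

The same `to_first_order` wrapper works for any order, since the state list simply carries one entry per derivative.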

Summary of exact solutions

Some differential equations have solutions that can be written in an exact and closed form. Several important classes are given here.

In the tables below, $P(x)$, $Q(x)$, $P(y)$, $Q(y)$, and $M(x,y)$, $N(x,y)$ are any integrable functions of $x$, $y$; $b$ and $c$ are given real constants; and $C_1, C_2, \ldots$ are arbitrary constants (complex in general). The differential equations are in their equivalent and alternative forms that lead to the solution through integration.

In the integral solutions, $\lambda$ and $\varepsilon$ are dummy variables of integration (the continuum analogues of indices in summation), and the notation $\int^x F(\lambda)\,d\lambda$ just means to integrate $F(\lambda)$ with respect to $\lambda$, then after the integration substitute $\lambda = x$, without adding constants (explicitly stated).

Separable equations

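| Differential equation | Solution method | General solution |
| --- | --- | --- |
| First-order, separable in $x$ and $y$ (general case, see below for special cases)[26]: $P_1(x)\,Q_1(y) + P_2(x)\,Q_2(y)\,\frac{dy}{dx} = 0$ | Separation of variables (divide by $P_2 Q_1$). | $\int^x \frac{P_1(\lambda)}{P_2(\lambda)}\,d\lambda + \int^y \frac{Q_2(\lambda)}{Q_1(\lambda)}\,d\lambda = C$ |
| First-order, separable in $x$[24]: $\frac{dy}{dx} = F(x)$ | Direct integration. | $y = \int^x F(\lambda)\,d\lambda + C$ |
| First-order, autonomous, separable in $y$[24]: $\frac{dy}{dx} = F(y)$ | Separation of variables (divide by $F$). | $x = \int^y \frac{d\lambda}{F(\lambda)} + C$ |
| First-order, separable in $x$ and $y$[24]: $P(y)\,\frac{dy}{dx} + Q(x) = 0$ | Integrate throughout. | $\int^y P(\lambda)\,d\lambda + \int^x Q(\lambda)\,d\lambda = C$ |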

General first-order equations

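| Differential equation | Solution method | General solution |
| --- | --- | --- |
| First-order, homogeneous[24]: $\frac{dy}{dx} = F\left(\frac{y}{x}\right)$ | Set $y = ux$, then solve by separation of variables in $u$ and $x$. | $\ln(Cx) = \int^{y/x} \frac{d\lambda}{F(\lambda) - \lambda}$ |
| First-order, separable[26]: $y\,M(xy) + x\,N(xy)\,\frac{dy}{dx} = 0$ | Separation of variables (divide by $xy$). | $\ln(Cx) = \int^{xy} \frac{N(\lambda)\,d\lambda}{\lambda\,[N(\lambda) - M(\lambda)]}$. If $N = M$, the solution is $xy = C$. |
| Exact differential, first-order[24]: $M(x,y)\,\frac{dy}{dx} + N(x,y) = 0$, where $\frac{\partial M}{\partial x} = \frac{\partial N}{\partial y}$ | Integrate throughout. | $F(x,y) = \int^y M(x,\lambda)\,d\lambda + \int^x N(\lambda,y)\,d\lambda + Y(y) + X(x) = C$, where $Y(y)$ and $X(x)$ are functions from the integrals rather than constant values, which are set to make the final function $F(x, y)$ satisfy the initial equation. |
| Inexact differential, first-order[24]: $M(x,y)\,\frac{dy}{dx} + N(x,y) = 0$, where $\frac{\partial M}{\partial x} \neq \frac{\partial N}{\partial y}$ | Integration factor $\mu(x, y)$ satisfying $\frac{\partial(\mu M)}{\partial x} = \frac{\partial(\mu N)}{\partial y}$. | If $\mu(x, y)$ can be found: $F(x,y) = \int^y \mu(x,\lambda)\,M(x,\lambda)\,d\lambda + \int^x \mu(\lambda,y)\,N(\lambda,y)\,d\lambda + Y(y) + X(x) = C$ |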
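A short worked example may help with the exact case $M(x,y)\,\frac{dy}{dx} + N(x,y) = 0$ with $\partial M/\partial x = \partial N/\partial y$. The specific functions $M$ and $N$ below are chosen for illustration only, and the potential function is found by integrating $M$ in $y$ and then matching $N$, which is one common way to fix the otherwise undetermined functions of integration.

$$
\begin{aligned}
&\text{Equation:} && (x^2 + 2y)\,\frac{dy}{dx} + 2xy = 0, \qquad M = x^2 + 2y, \quad N = 2xy \\
&\text{Exactness:} && \frac{\partial M}{\partial x} = 2x = \frac{\partial N}{\partial y} \\
&\text{Integrate } M \text{ in } y: && F(x,y) = x^2 y + y^2 + g(x) \\
&\text{Match } N: && \frac{\partial F}{\partial x} = 2xy + g'(x) = 2xy \;\Rightarrow\; g'(x) = 0 \\
&\text{General solution:} && x^2 y + y^2 = C
\end{aligned}
$$

Differentiating $x^2 y + y^2 = C$ with respect to $x$ gives $2xy + (x^2 + 2y)\,\frac{dy}{dx} = 0$, which recovers the original equation.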

General second-order equations

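| Differential equation | Solution method | General solution |
| --- | --- | --- |
| Second-order, autonomous[27]: $\frac{d^2y}{dx^2} = F(y)$ | Multiply both sides of the equation by $2\,\frac{dy}{dx}$, substitute $2\,\frac{dy}{dx}\,\frac{d^2y}{dx^2} = \frac{d}{dx}\left(\frac{dy}{dx}\right)^2$, then integrate twice. | $x = \pm\int^y \frac{d\lambda}{\sqrt{2\int^\lambda F(\varepsilon)\,d\varepsilon + C_1}} + C_2$ |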

Linear to the nth order equations

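| Differential equation | Solution method | General solution |
| --- | --- | --- |
| First-order, linear, inhomogeneous, function coefficients[24]: $\frac{dy}{dx} + P(x)\,y = Q(x)$ | Integrating factor: $e^{\int^x P(\lambda)\,d\lambda}$. | Armour formula: $y = e^{-\int^x P(\lambda)\,d\lambda}\left[\int^x e^{\int^\lambda P(\varepsilon)\,d\varepsilon}\,Q(\lambda)\,d\lambda + C\right]$ |
| Second-order, linear, inhomogeneous, function coefficients: $\frac{d^2y}{dx^2} + 2P(x)\,\frac{dy}{dx} + \left(P(x)^2 + P'(x)\right) y = Q(x)$ | Integrating factor: $e^{\int^x P(\lambda)\,d\lambda}$. | $y = e^{-\int^x P(\lambda)\,d\lambda}\left[\int^x\left(\int^\xi e^{\int^\lambda P(\varepsilon)\,d\varepsilon}\,Q(\lambda)\,d\lambda\right)d\xi + C_1 x + C_2\right]$ |
| Second-order, linear, inhomogeneous, constant coefficients[28]: $\frac{d^2y}{dx^2} + b\,\frac{dy}{dx} + c\,y = r(x)$ | Complementary function $y_c$: assume $y_c = e^{\alpha x}$, substitute and solve the polynomial in $\alpha$ to find the linearly independent functions $e^{\alpha_j x}$. Particular integral $y_p$: in general the method of variation of parameters, though for very simple $r(x)$ inspection may work.[24] | $y = y_c + y_p$. If $b^2 > 4c$, then $y_c = C_1 e^{-\frac{x}{2}\left(b + \sqrt{b^2 - 4c}\right)} + C_2 e^{-\frac{x}{2}\left(b - \sqrt{b^2 - 4c}\right)}$. If $b^2 = 4c$, then $y_c = (C_1 x + C_2)\,e^{-\frac{bx}{2}}$. If $b^2 < 4c$, then $y_c = e^{-\frac{bx}{2}}\left[C_1 \sin\left(x\,\frac{\sqrt{4c - b^2}}{2}\right) + C_2 \cos\left(x\,\frac{\sqrt{4c - b^2}}{2}\right)\right]$. |
| nth-order, linear, inhomogeneous, constant coefficients[28]: $\sum_{j=0}^{n} b_j\,\frac{d^j y}{dx^j} = r(x)$ | Complementary function $y_c$: assume $y_c = e^{\alpha x}$, substitute and solve the polynomial in $\alpha$ to find the linearly independent functions $e^{\alpha_j x}$. Particular integral $y_p$: in general the method of variation of parameters, though for very simple $r(x)$ inspection may work.[24] | $y = y_c + y_p$. Since $\alpha_j$ are the solutions of the polynomial of degree $n$, $\prod_{j=1}^{n}(\alpha - \alpha_j) = 0$: for $\alpha_j$ all different, $y_c = \sum_{j=1}^{n} C_j e^{\alpha_j x}$; for each root $\alpha_j$ repeated $k_j$ times, $y_c = \sum_j \left(\sum_{\ell=1}^{k_j} C_{j,\ell}\,x^{\ell-1}\right) e^{\alpha_j x}$; for some $\alpha_j$ complex, setting $\alpha = \chi_j + i\gamma_j$ and using Euler's formula allows some terms in the previous results to be written in the form $C_j e^{\chi_j x}\cos(\gamma_j x + \phi_j)$, where $\phi_j$ is an arbitrary constant (phase shift). |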
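The three cases for the second-order constant-coefficient complementary function can be written out as a small routine. The sketch below builds $y_c$ for $y'' + b\,y' + c\,y = 0$ from the sign of $b^2 - 4c$ and checks it with a finite-difference residual. The function names, the sample coefficients, and the exact floating-point comparison used to detect the repeated-root case are assumptions made for illustration.

```python
import math


def complementary_function(b, c):
    """Return y_c(x, C1, C2) solving y'' + b*y' + c*y = 0 for real b, c."""
    disc = b * b - 4 * c
    if disc > 0:  # two distinct real roots
        r = math.sqrt(disc)
        return lambda x, C1, C2: (C1 * math.exp(-x * (b + r) / 2)
                                  + C2 * math.exp(-x * (b - r) / 2))
    if disc == 0:  # repeated real root (exact comparison, illustration only)
        return lambda x, C1, C2: (C1 * x + C2) * math.exp(-b * x / 2)
    w = math.sqrt(-disc) / 2  # complex-conjugate roots -b/2 +/- i*w
    return lambda x, C1, C2: math.exp(-b * x / 2) * (
        C1 * math.sin(w * x) + C2 * math.cos(w * x))


def residual(yc, b, c, x, C1, C2, h=1e-4):
    """Finite-difference estimate of y'' + b*y' + c*y at x (should be ~0)."""
    y0 = yc(x, C1, C2)
    yp = yc(x + h, C1, C2)
    ym = yc(x - h, C1, C2)
    d1 = (yp - ym) / (2 * h)            # central first derivative
    d2 = (yp - 2 * y0 + ym) / (h * h)   # central second derivative
    return d2 + b * d1 + c * y0


# One sample per case: b^2 > 4c, b^2 = 4c, b^2 < 4c.
for b, c in [(3.0, 2.0), (2.0, 1.0), (2.0, 5.0)]:
    yc = complementary_function(b, c)
    print(b, c, residual(yc, b, c, x=0.3, C1=1.5, C2=-0.7))
```

The printed residuals are small but not exactly zero, because the derivatives are only approximated by finite differences.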

The guessing method

When all other methods for solving an ODE fail, or in the cases where we have some intuition about what the solution to a DE might look like, it is sometimes possible to solve a DE simply by guessing the solution and validating that it is correct. To use this method, we guess a solution to the differential equation and then plug it into the differential equation to check whether it satisfies the equation. If it does, then we have a particular solution to the DE; otherwise we start over and try another guess. For instance, we could guess that the solution to a DE has the form $y = A e^{\alpha t}$, since this is a very common solution that physically behaves in a sinusoidal way when $\alpha$ is complex.

In the case of a first-order ODE that is non-homogeneous, we first need to find a solution to the homogeneous portion of the DE, otherwise known as the characteristic equation, and then find a solution to the entire non-homogeneous equation by guessing. Finally, we add both of these solutions together to obtain the total solution to the ODE, that is:

$$y = y_c + y_p,$$

where $y_c$ is the solution of the homogeneous equation and $y_p$ is the particular solution found by guessing.
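As a minimal worked illustration of the procedure, consider a first-order non-homogeneous equation chosen here only as an example (it does not come from the text above). The homogeneous part is solved with an exponential guess, and the particular solution with a constant guess $K$.

$$
\begin{aligned}
&\text{Equation:} && y' + 2y = 4 \\
&\text{Homogeneous guess } y_c = A e^{\alpha t}: && \alpha A e^{\alpha t} + 2 A e^{\alpha t} = 0 \;\Rightarrow\; \alpha = -2, \quad y_c = A e^{-2t} \\
&\text{Particular guess } y_p = K: && 0 + 2K = 4 \;\Rightarrow\; K = 2, \quad y_p = 2 \\
&\text{Total solution:} && y = A e^{-2t} + 2 \\
&\text{Check:} && y' + 2y = -2A e^{-2t} + 2A e^{-2t} + 4 = 4
\end{aligned}
$$

The constant $A$ remains free and is fixed by an initial condition; for example, $y(0) = 5$ gives $A = 3$.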

Software for ODE solving

- Maxima, an open-source computer algebra system.

- COPASI, a free (Artistic License 2.0) software package for the integration and analysis of ODEs.

- MATLAB, a technical computing application (MATrix LABoratory)

- GNU Octave, a high-level language, primarily intended for numerical computations.

- Scilab, an open source application for numerical computation.

- Maple, a proprietary application for symbolic calculations.

- Mathematica, a proprietary application primarily intended for symbolic calculations.

- SymPy, a Python package that can solve ODEs symbolically

- Julia (programming language), a high-level language primarily intended for numerical computations.

- SageMath, an open-source application that uses a Python-like syntax with a wide range of capabilities spanning several branches of mathematics.

- SciPy, a Python package that includes an ODE integration module.

- Chebfun, an open-source package, written in MATLAB, for computing with functions to 15-digit accuracy.

- GNU R, an open source computational environment primarily intended for statistics, which includes packages for ODE solving.

See also

- Boundary value problem

- Examples of differential equations

- Laplace transform applied to differential equations

- List of dynamical systems and differential equations topics

- Matrix differential equation

- Method of undetermined coefficients

- Recurrence relation

Notes

1. Dennis G. Zill (15 March 2012). A First Course in Differential Equations with Modeling Applications. Cengage Learning. ISBN 978-1-285-40110-2. Archived from the original on 17 January 2020. Retrieved 11 July 2019.

2. "What is the origin of the term "ordinary differential equations"?". hsm.stackexchange.com. Stack Exchange. Retrieved 2016-07-28.

3. Mathematics for Chemists, D.M. Hirst, Macmillan Press, 1976, (No ISBN) SBN: 333-18172-7

4. Kreyszig (1972, p. 64)

5. Simmons (1972, pp. 1, 2)

6. Halliday & Resnick (1977, p. 78)

7. Tipler (1991, pp. 78–83)

8. Harper (1976, p. 127)

9. Kreyszig (1972, p. 2)

10. Simmons (1972, p. 3)

11. Kreyszig (1972, p. 24)

12. Simmons (1972, p. 47)

13. Harper (1976, p. 128)

14. Kreyszig (1972, p. 12)

15. Ascher & Petzold (1998, p. 12)

16. Achim Ilchmann; Timo Reis (2014). Surveys in Differential-Algebraic Equations II. Springer. pp. 104–105. ISBN 978-3-319-11050-9.

17. Ascher & Petzold (1998, p. 5)

18. Kreyszig (1972, p. 78)

19. Kreyszig (1972, p. 4)

20. Crelle, 1866, 1868

21. Dresner (1999, p. 9)

22. Logan, J. (2013). Applied mathematics (Fourth ed.).

23. Ascher & Petzold (1998, p. 13)

24. Elementary Differential Equations and Boundary Value Problems (4th Edition), W.E. Boyce, R.C. Diprima, Wiley International, John Wiley & Sons, 1986, ISBN 0-471-83824-1

25. Boscain; Chitour 2011, p. 21

26. Mathematical Handbook of Formulas and Tables (3rd edition), S. Lipschutz, M. R. Spiegel, J. Liu, Schaum's Outline Series, 2009, ISBN 978-0-07-154855-7

27. Further Elementary Analysis, R. Porter, G.Bell & Sons (London), 1978, ISBN 0-7135-1594-5

28. Mathematical methods for physics and engineering, K.F. Riley, M.P. Hobson, S.J. Bence, Cambridge University Press, 2010, ISBN 978-0-521-86153-3

References

- Halliday, David; Resnick, Robert (1977), Physics (3rd ed.), New York: Wiley, ISBN 0-471-71716-9

- Harper, Charlie (1976), Introduction to Mathematical Physics, New Jersey: Prentice-Hall, ISBN 0-13-487538-9

- Kreyszig, Erwin (1972), Advanced Engineering Mathematics (3rd ed.), New York: Wiley, ISBN 0-471-50728-8

- Polyanin, A. D. and V. F. Zaitsev, Handbook of Exact Solutions for Ordinary Differential Equations (2nd edition), Chapman & Hall/CRC Press, Boca Raton, 2003. ISBN 1-58488-297-2

- Simmons, George F. (1972), Differential Equations with Applications and Historical Notes, New York: McGraw-Hill, LCCN 75173716

- Tipler, Paul A. (1991), Physics for Scientists and Engineers: Extended version (3rd ed.), New York: Worth Publishers, ISBN 0-87901-432-6

- Boscain, Ugo; Chitour, Yacine (2011), Introduction à l'automatique (PDF) (in French)

- Dresner, Lawrence (1999), Applications of Lie's Theory of Ordinary and Partial Differential Equations, Bristol and Philadelphia: Institute of Physics Publishing, ISBN 978-0750305303

- Ascher, Uri; Petzold, Linda (1998), Computer Methods for Ordinary Differential Equations and Differential-Algebraic Equations, SIAM, ISBN 978-1-61197-139-2

Bibliography

- Coddington, Earl A.; Levinson, Norman (1955). Theory of Ordinary Differential Equations. New York: McGraw-Hill.

- Hartman, Philip (2002) [1964], Ordinary differential equations, Classics in Applied Mathematics, 38, Philadelphia: Society for Industrial and Applied Mathematics, doi:10.1137/1.9780898719222, ISBN 978-0-89871-510-1, MR 1929104

- W. Johnson, A Treatise on Ordinary and Partial Differential Equations, John Wiley and Sons, 1913, in University of Michigan Historical Math Collection

- Ince, Edward L. (1944) [1926], Ordinary Differential Equations, Dover Publications, New York, ISBN 978-0-486-60349-0, MR 0010757

- Witold Hurewicz, Lectures on Ordinary Differential Equations, Dover Publications, ISBN 0-486-49510-8

- Ibragimov, Nail H. (1993). CRC Handbook of Lie Group Analysis of Differential Equations Vol. 1-3. Providence: CRC-Press. ISBN 0-8493-4488-3.

- Teschl, Gerald (2012). Ordinary Differential Equations and Dynamical Systems. Providence: American Mathematical Society. ISBN 978-0-8218-8328-0.

- A. D. Polyanin, V. F. Zaitsev, and A. Moussiaux, Handbook of First Order Partial Differential Equations, Taylor & Francis, London, 2002. ISBN 0-415-27267-X

- D. Zwillinger, Handbook of Differential Equations (3rd edition), Academic Press, Boston, 1997.

External links

- "Differential equation, ordinary", Encyclopedia of Mathematics, EMS Press, 2001 [1994]

- EqWorld: The World of Mathematical Equations, containing a list of ordinary differential equations with their solutions.

- Online Notes / Differential Equations by Paul Dawkins, Lamar University.

- Differential Equations, S.O.S. Mathematics.

- A primer on analytical solution of differential equations from the Holistic Numerical Methods Institute, University of South Florida.

- Ordinary Differential Equations and Dynamical Systems lecture notes by Gerald Teschl.

- Notes on Diffy Qs: Differential Equations for Engineers An introductory textbook on differential equations by Jiri Lebl of UIUC.

- Modeling with ODEs using Scilab A tutorial on how to model a physical system described by ODE using Scilab standard programming language by Openeering team.

- Solving an ordinary differential equation in Wolfram|Alpha


Source: https://en.wikipedia.org/wiki/Ordinary_differential_equation

![R=[x_{0}-a,x_{0}+a]\times [y_{0}-b,y_{0}+b]](https://wikimedia.org/api/rest_v1/media/math/render/svg/dfa448c4a13705b1df4c401c5ca9d11fc7c10735)

![I=[x_{0}-h,x_{0}+h]\subset [x_{0}-a,x_{0}+a]](https://wikimedia.org/api/rest_v1/media/math/render/svg/b231b296413a91fd03243fa6b0b15a1fc58684c1)

![{\displaystyle {\begin{cases}\mathbb {R} &y_{0}=0\\[4pt]\left(-\infty ,x_{0}+{\frac {1}{y_{0}}}\right)&y_{0}>0\\[4pt]\left(x_{0}+{\frac {1}{y_{0}}},+\infty \right)&y_{0}<0\end{cases}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/8142f4985f0a70e5b2d7db31af3f8a6546c7b621)

![\ln(Cx)=\int ^{xy}{\frac {N(\lambda )\,d\lambda }{\lambda [N(\lambda )-M(\lambda )]}}\,\!](https://wikimedia.org/api/rest_v1/media/math/render/svg/9ab5454a27718de92dcaf72c85dc817e7b41d6ea)

![{\displaystyle y=e^{-\int ^{x}P(\lambda )\,d\lambda }\left[\int ^{x}e^{\int ^{\lambda }P(\varepsilon )\,d\varepsilon }Q(\lambda )\,d\lambda +C\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/0161923c3b281e7f5d6d2c5c61e51e6c61f3e67d)

![{\displaystyle y=e^{-\int ^{x}P(\lambda )\,d\lambda }\left[\int ^{x}\left(\int ^{\xi }e^{\int ^{\lambda }P(\varepsilon )\,d\varepsilon }Q(\lambda )\,d\lambda \right)d\xi +C_{1}x+C_{2}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/b111803be7ae071250bbfd01eaf62f114e454aae)

![{\displaystyle y_{c}=e^{-{\frac {bx}{2}}}\left[C_{1}\sin \left(x\,{\frac {\sqrt {4c-b^{2}}}{2}}\right)+C_{2}\cos \left(x\,{\frac {\sqrt {4c-b^{2}}}{2}}\right)\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/5e53e6e17d2d3b8ca6aef9f6aacaae372efe9c3d)
